Event-Driven Integration with GCP serverless services

In this post, we walk through a scenario that uses Google Cloud Platform (GCP) serverless services to achieve an event-driven model.

The scenario is an Ink Replenishment program. Whenever a user buys a printer at a store or online, the system sends the user an email inviting them to register for the program. If the user opts in, they automatically receive a suggestion to buy new ink whenever the ink runs low. The user no longer has to look up the ink code for the printer; she simply responds Yes or No to the system to order new ink.

Once a Yes response is sent, the system finds the store that sold the printer based on the serial number and forwards the order to that store for fulfillment. This post addresses that order scenario using the event-driven model.

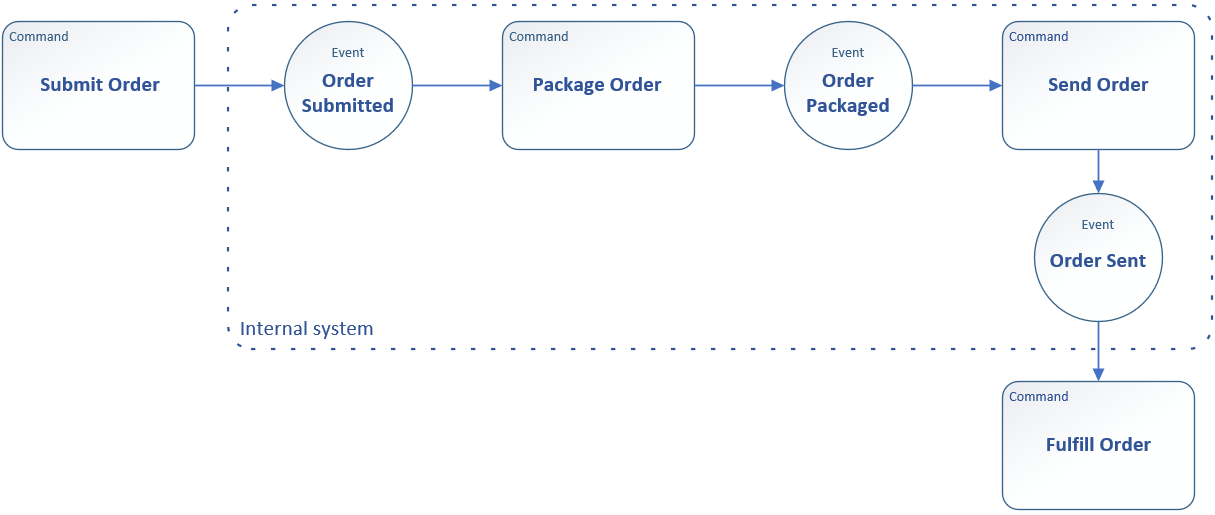

Let's say we have a chain of commands and events as in the diagram below.

The very first command comes from the customer: she responds YES from her computer or phone to trigger the order workflow. The last command is to fulfill the order, which is handled by the external stores. The two remaining commands, Package Order and Send Order, need to be implemented in the internal system.

Before going into the details of the implementation, here is a quick explanation of the events in the diagram above. We use Google Cloud Pub/Sub for messaging events, and each event is generated with a specific data object after its command has executed. (That is why the diagram shows no data objects, only a series of commands and events.)

An example is the event Order Submitted: once the customer confirms the order for new ink, the application on the customer's laptop or phone triggers an event and pushes it to a topic in Cloud Pub/Sub. The event then triggers the next command, which is subscribed to that topic.

    {
      "customerId": "0000001234",
      "serial": "serial number of the printer",
      "date": "2019-09-06T14:38:00.000",
      "items": [
        {
          "code": "64",
          "color": "Y",
          "inklevel": 24
        },
        {
          "code": "64",
          "color": "B",
          "inklevel": 10
        }
      ]
    }
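As a rough illustration of how a client application could build this event before pushing it to a topic, here is a minimal Python sketch. The class names `InkItem` and `OrderSubmitted`, the method `to_message`, and the sample serial number are assumptions made for this example; only the field names come from the sample above. The actual publish call is left out, since it depends on the Pub/Sub client in use.

```python
import json
from dataclasses import dataclass, asdict, field
from typing import List


@dataclass
class InkItem:
    code: str       # ink code, e.g. "64"
    color: str      # color key as in the sample, e.g. "Y" or "B"
    inklevel: int   # remaining ink level reported for this cartridge


@dataclass
class OrderSubmitted:
    # Field names mirror the sample event; the class itself is hypothetical.
    customerId: str
    serial: str
    date: str
    items: List[InkItem] = field(default_factory=list)

    def to_message(self) -> bytes:
        """Serialize the event to UTF-8 JSON bytes for use as a message payload."""
        return json.dumps(asdict(self)).encode("utf-8")


event = OrderSubmitted(
    customerId="0000001234",
    serial="1234554321",  # made-up serial number for the example
    date="2019-09-06T14:38:00.000",
    items=[InkItem("64", "Y", 24), InkItem("64", "B", 10)],
)
payload = event.to_message()
```

The resulting `payload` is what would be handed to a publisher; compression is not applied in this sketch.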

SAP CPI and Advantco GCP Adapter

Now it's time to think about implementing the commands Package Order and Send Order. We can use Google Cloud Functions to respond to events from Cloud Pub/Sub and to process, transform, and enrich data. Cloud Functions is therefore a good glue for point-to-point connections; in our model, it can act as a command that consumes data from an event and then generates a new event to trigger another command.

However, that approach requires coding the connections to back-office systems such as SAP or Salesforce, which are not part of the GCP stack. A better and faster option is SAP CPI, which already has adapters (IDOC, ODATA, SFO…) to connect to those systems.
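The idea of a command that consumes one event and emits the next can be sketched without any cloud dependency. The toy below uses an in-memory bus as a stand-in for Cloud Pub/Sub; the class `InMemoryBus`, the topic names `order-submitted`, `order-packaged` and `order-sent`, and the `status` field are all assumptions for illustration, not names from the project.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict
Handler = Callable[[Event], None]


class InMemoryBus:
    """Toy stand-in for Cloud Pub/Sub: topics with subscribed handlers."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self.log: List[str] = []  # topics, in the order events were published

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        self.log.append(topic)
        for handler in self._subscribers[topic]:
            handler(event)


bus = InMemoryBus()


def package_order(event: Event) -> None:
    # Command: consume the submitted order and emit a new event (hypothetical topic).
    bus.publish("order-packaged", {**event, "status": "packaged"})


def send_order(event: Event) -> None:
    # Command: consume the packaged order and emit the next event (hypothetical topic).
    bus.publish("order-sent", {**event, "status": "sent"})


bus.subscribe("order-submitted", package_order)
bus.subscribe("order-packaged", send_order)

# Publishing the first event drives the whole chain of commands.
bus.publish("order-submitted", {"customerId": "0000001234", "serial": "1234554321"})
```

Each command only knows the topic it listens to and the topic it writes to, which is what keeps the steps loosely coupled.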

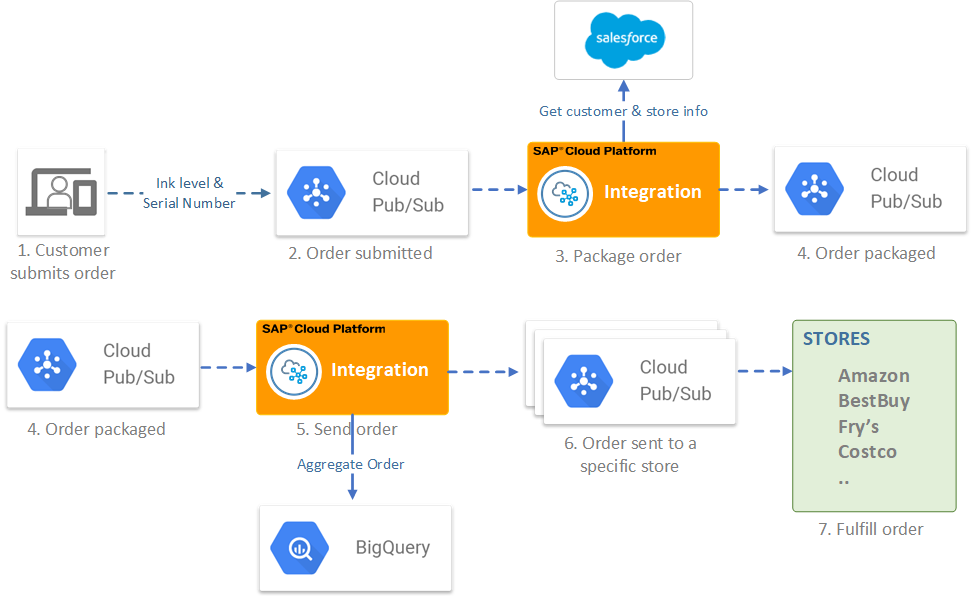

The diagram below shows Salesforce as the back office and SAP CPI connecting the events, with the Advantco GCP adapter consuming and producing event messages from Google Cloud Pub/Sub.

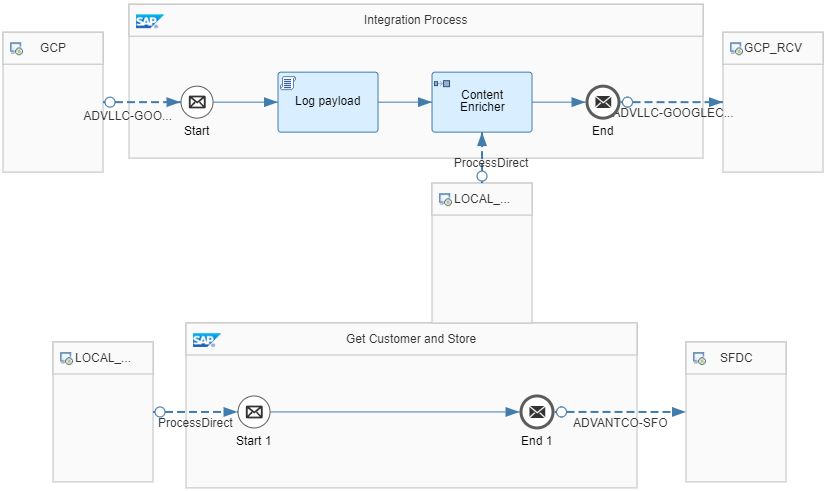

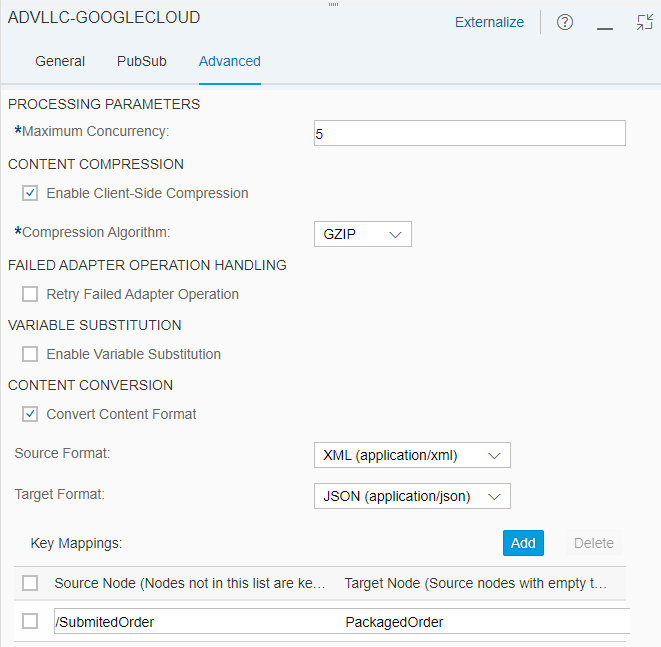

Package Order

This is the flow of the command Package Order. The Advantco GCP adapter and the SFO (Salesforce) adapter make the flow much simpler.

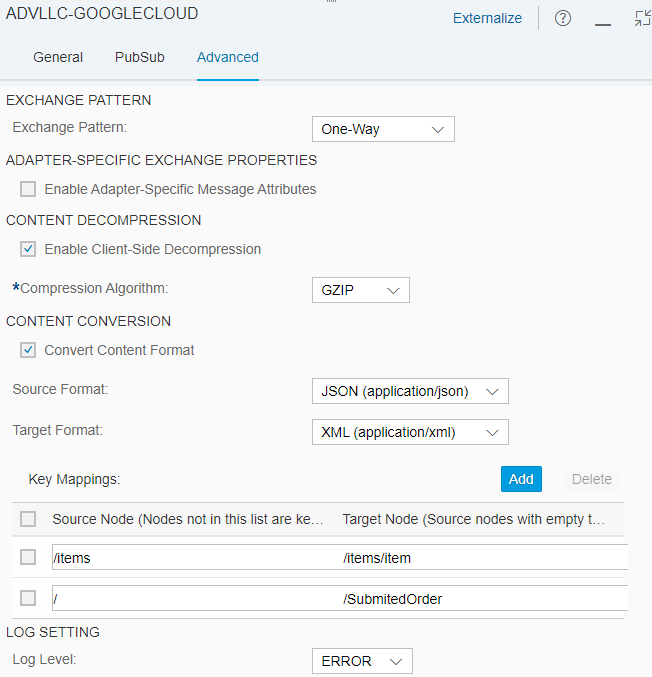

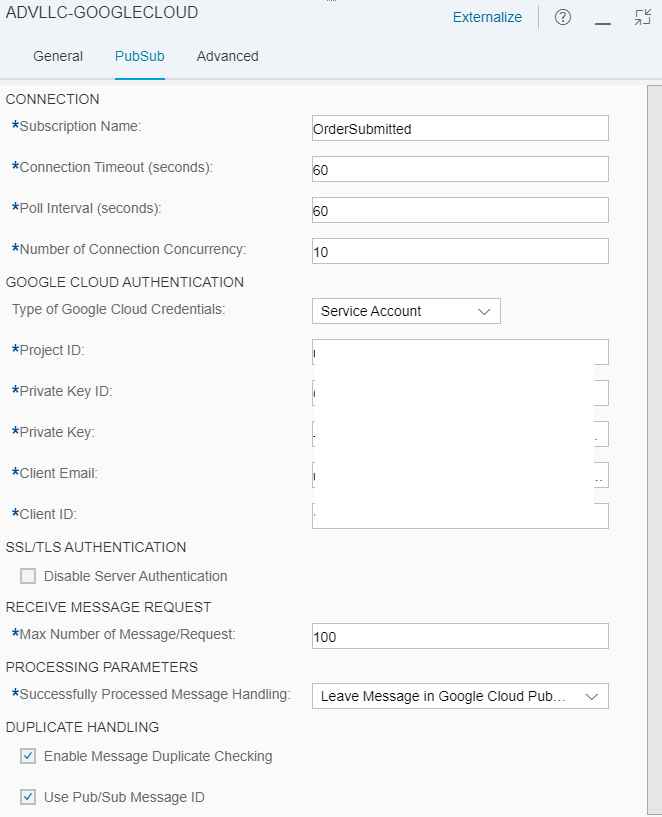

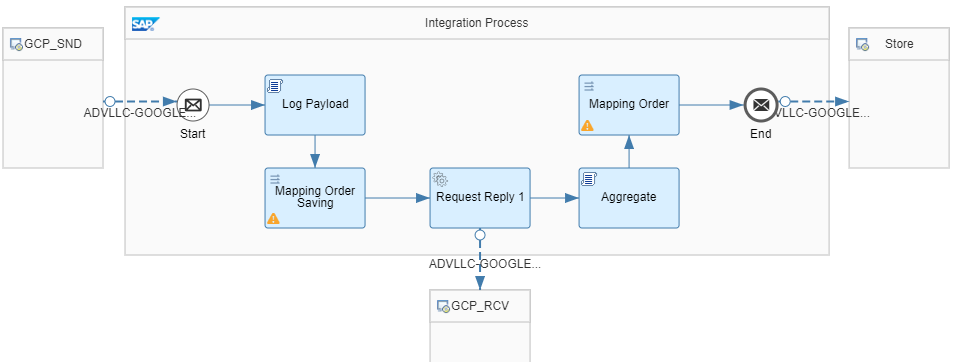

Besides pulling event messages from Cloud Pub/Sub, the GCP sender channel also uncompresses GZIP messages and converts JSON messages to XML. This keeps the flow clean and simple.
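To make those two steps concrete, here is a minimal Python sketch of what happens to a message conceptually: decompress the GZIP payload, parse the JSON, and build an XML document. This is not the adapter's implementation; the functions `json_to_xml` and `decode_event` and the rule that a list named `items` becomes repeated `item` elements are assumptions for this example.

```python
import gzip
import json
import xml.etree.ElementTree as ET


def json_to_xml(tag: str, value) -> ET.Element:
    """Convert a JSON value to an element; list entries become repeated child tags."""
    elem = ET.Element(tag)
    if isinstance(value, dict):
        for key, child in value.items():
            elem.append(json_to_xml(key, child))
    elif isinstance(value, list):
        # Assumed convention: "items" -> repeated <item> children.
        child_tag = tag[:-1] if tag.endswith("s") else "item"
        for child in value:
            elem.append(json_to_xml(child_tag, child))
    else:
        elem.text = str(value)
    return elem


def decode_event(data: bytes, root_tag: str = "SubmitedOrder") -> str:
    """Gunzip a message payload, parse the JSON, and return it as an XML string."""
    order = json.loads(gzip.decompress(data).decode("utf-8"))
    return ET.tostring(json_to_xml(root_tag, order), encoding="unicode")


# Simulate a compressed message as it might arrive from the topic.
raw = gzip.compress(json.dumps({
    "customerId": "0000001238",
    "serial": "1234554321",
    "items": [{"code": "64", "color": "Y", "inklevel": 24}],
}).encode("utf-8"))
xml_text = decode_event(raw)
```

In the real flow the channel is configured rather than coded, so none of this has to be written by hand.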

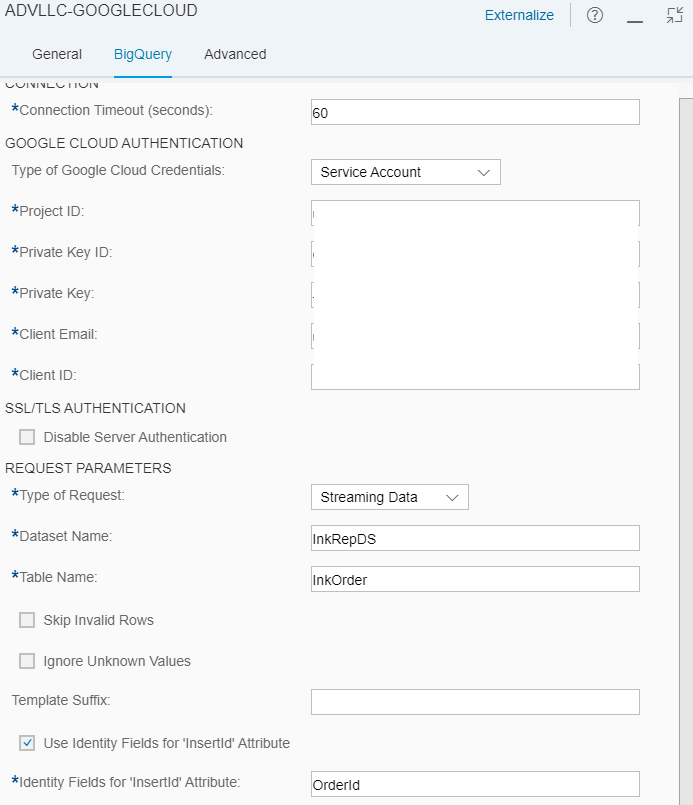

The connection configuration within the adapter supports Service Account and OAuth2 authentication, and it can open multiple concurrent connections to handle a high volume of messages.

Content Enricher is a very cool step in SAP CPI: it combines two data sources into one in a single step, without Message Mapping. That is why we converted the SubmittedOrder from JSON to XML in the GCP sender channel above.

Source 1 - a submitted order

    <?xml version="1.0" encoding="UTF-8"?>
    <SubmitedOrder>
      <customerId>0000001238</customerId>
      <serial>1234554321</serial>
      <date>2019-09-06T14:38:00.000</date>
      <items>
        <item>
          <code>64</code>
          <color>Y</color>
          <inklevel>24</inklevel>
        </item>
        <item>
          <code>64</code>
          <color>B</color>
          <inklevel>10</inklevel>
        </item>
      </items>
    </SubmitedOrder>

Source 2 - customer and store info

    <?xml version="1.0" encoding="UTF-8"?>
    <queryResponse>
      <Customer__c>
        <id>...</id>
        ..
        <External_CustID__C>0000001238</External_CustID__C>
        <Store>
          <id>...</id>
          ..
        </Store>
      </Customer__c>
    </queryResponse>

Target - the combination of Source 1 and Source 2

    <?xml version="1.0" encoding="UTF-8"?>
    <SubmitedOrder>
      <customerId>0000001238</customerId>
      <Customer__c>
        <id>...</id>
        ..
        <External_CustID__C>0000001238</External_CustID__C>
        <Store>
          <id>...</id>
          ..
        </Store>
      </Customer__c>
      <serial>1234554321</serial>
      <date>2019-09-06T14:38:00.000</date>
      <items>
        <item>
          <code>64</code>
          <color>Y</color>
          <inklevel>24</inklevel>
        </item>
        <item>
          <code>64</code>
          <color>B</color>
          <inklevel>10</inklevel>
        </item>
      </items>
    </SubmitedOrder>
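For readers who want to see the merge logic spelled out, the following Python sketch reproduces the same kind of combination by hand: it looks up the customer whose `External_CustID__C` matches the order's `customerId` and inserts that record right after `customerId`. It is only an approximation of what the Content Enricher step does; the function `enrich` and the store id `store-1` are made up for this example.

```python
import xml.etree.ElementTree as ET

ORDER_XML = """<SubmitedOrder>
  <customerId>0000001238</customerId>
  <serial>1234554321</serial>
</SubmitedOrder>"""

# "store-1" is a placeholder id invented for this example.
LOOKUP_XML = """<queryResponse>
  <Customer__c>
    <External_CustID__C>0000001238</External_CustID__C>
    <Store><id>store-1</id></Store>
  </Customer__c>
</queryResponse>"""


def enrich(order_xml: str, lookup_xml: str) -> ET.Element:
    """Insert the matching <Customer__c> right after <customerId> in the order."""
    order = ET.fromstring(order_xml)
    lookup = ET.fromstring(lookup_xml)
    key = order.findtext("customerId")
    for customer in lookup.findall("Customer__c"):
        if customer.findtext("External_CustID__C") == key:
            position = list(order).index(order.find("customerId")) + 1
            order.insert(position, customer)
            break
    return order


enriched = enrich(ORDER_XML, LOOKUP_XML)
```

If no customer matches, the order is returned unchanged in this sketch.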

The GCP receiver channel creates a new event after the order is packaged by producing an event message to Cloud Pub/Sub.

By agreement in the order workflow, all event messages are stored in JSON format and compressed with GZIP, so the GCP receiver channel converts and compresses the message to keep it uniform.
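The outbound direction can be sketched the same way in Python: turn the XML back into JSON and compress it with GZIP. Again, this is an illustration rather than the adapter's code; the functions `xml_to_json` and `encode_event` and the `list_tags` parameter (which marks elements to be emitted as JSON arrays) are assumptions. Note that in this simple version all leaf values come out as strings.

```python
import gzip
import json
import xml.etree.ElementTree as ET


def xml_to_json(elem: ET.Element, list_tags=frozenset({"items"})):
    """Convert an element to a JSON-ready value; tags in list_tags become arrays."""
    children = list(elem)
    if elem.tag in list_tags:
        return [xml_to_json(child, list_tags) for child in children]
    if not children:
        return elem.text or ""
    return {child.tag: xml_to_json(child, list_tags) for child in children}


def encode_event(xml_text: str) -> bytes:
    """Convert an XML order to JSON and compress it with GZIP."""
    root = ET.fromstring(xml_text)
    return gzip.compress(json.dumps(xml_to_json(root)).encode("utf-8"))


message = encode_event(
    "<SubmitedOrder>"
    "<customerId>0000001238</customerId>"
    "<items><item><code>64</code><color>Y</color></item></items>"
    "</SubmitedOrder>"
)
```

A consumer can reverse the process with `gzip.decompress` followed by `json.loads`.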

Send Order

This is the flow of the command Send Order. Before sending out the order, we store it in BigQuery, another serverless service from Google, so that we can track the order status and analyze the data and produce statistics later.

Consuming and producing event messages from Cloud Pub/Sub with the Advantco GCP adapter follows the same steps as above, including GZIP compression/decompression and conversion between XML and JSON.

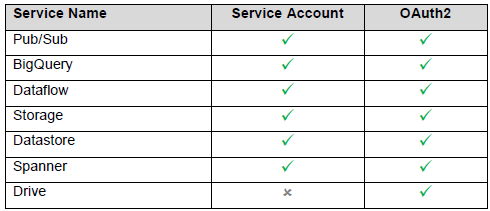

Besides Cloud Pub/Sub, the adapter also supports many other services such as BigQuery, Datastore, Spanner, Dataflow, Storage and Google Drive. Here is the list of services with authentication methods supported by the adapter.

We use BigQuery to store the order in the flow.
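The post does not show the table layout, so the following Python sketch only illustrates one possible way to shape an order for a tabular store: one row per ink item, with the order fields repeated on each row. The function `order_to_rows`, the column names, and the `status` value are all hypothetical.

```python
from typing import Dict, List


def order_to_rows(order: Dict, status: str = "PACKAGED") -> List[Dict]:
    """Flatten an order into one row per ink item (hypothetical table layout)."""
    return [
        {
            "customer_id": order["customerId"],
            "serial": order["serial"],
            "order_date": order["date"],
            "ink_code": item["code"],
            "color": item["color"],
            "ink_level": item["inklevel"],
            "status": status,  # assumed column for tracking the order status
        }
        for item in order["items"]
    ]


rows = order_to_rows({
    "customerId": "0000001234",
    "serial": "1234554321",
    "date": "2019-09-06T14:38:00.000",
    "items": [
        {"code": "64", "color": "Y", "inklevel": 24},
        {"code": "64", "color": "B", "inklevel": 10},
    ],
})
```

A flat layout like this makes it straightforward to query order status or count orders per ink code later.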

Summary

We did not go into much detail on the implementation, but we saw many benefits from the Google serverless services in the project: with no need to worry about server management, stability, or scalability, we could start the project from day one.

Most importantly, the Advantco GCP adapter played a key role in this project; the functions it offers sped up the implementation.

References

Advantco GCP Adapter

https://advantco.com/product/adapter/google-cloud-platform-adapter-for-sap-pi-po

Cloud Pub/Sub

https://cloud.google.com/pubsub/